Top 10 Data Protection Tips for SMEs

Is it onerous for SMEs to become compliant?

One of the stated aims of the UK Government’s Data Protection and Digital Information Bill is to support small businesses and remove unnecessary bureaucracy.

As context, there are 5.6m businesses in the UK, of which SMEs (fewer than 250 employees) represent 99% of the total. According to IAPP research, approximately 32,000 organisations in the UK have a registered DPO. It’s right, therefore, to focus on SMEs.

But how onerous is small business data protection now? Arguably, the answer is, not as onerous as you might think. We’ve created a top 10 checklist for start-ups and small businesses to help you decide what you should be concerned with:

1. Do I need to worry about data protection regulation?

Yes. Pretty much any business processing personal data for commercial purposes needs to worry about data protection. (The law does not apply to purely ‘personal or household activity’.) Having said that, the law and regulatory advice focus on taking a ‘proportionate’ approach. There’s no one size fits all, and the right approach will depend on the risk appetite of your organisation.

2. Do I need a DPO?

Probably not. If the answer to all three of these questions is no, you don’t need a DPO…

- Are you a public authority or body?

- Do your core business activities require regular and systematic monitoring of individuals on a large scale?

- Do your core business activities involve processing on a large scale ‘special category data’, or criminal convictions or offences data?

Even if you don’t need a DPO, it’s wise to nominate someone in your organisation as a data protection lead. This does not need to be a full-time role. Alternatively, you can outsource this activity to a person or company that can provide the support on a part-time basis.
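As a rough illustration, the three screening questions above can be written as a simple check. This is a minimal sketch rather than legal advice: the class and field names are made up for the example, and it assumes that a ‘yes’ to any one of the questions means a DPO is required.

```python
from dataclasses import dataclass


@dataclass
class DpoScreening:
    """Answers to the three screening questions (hypothetical field names)."""
    is_public_authority: bool
    large_scale_monitoring: bool      # regular and systematic monitoring at scale
    large_scale_sensitive_data: bool  # special category or criminal offence data at scale


def needs_dpo(answers: DpoScreening) -> bool:
    """Assumption: answering 'yes' to any one question means a DPO is needed."""
    return (
        answers.is_public_authority
        or answers.large_scale_monitoring
        or answers.large_scale_sensitive_data
    )


# Example: a small online retailer answering 'no' to all three questions
retailer = DpoScreening(False, False, False)
print(needs_dpo(retailer))  # False
```

What counts as ‘large scale’ or ‘core business activities’ is still a judgement call, so treat the output as a prompt for discussion, not a final answer.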

3. Do I need a RoPA (Record of Processing Activities)?

Maybe. There’s no escaping the fact RoPAs are challenging documents to complete and can absorb a huge amount of time. Companies with 250 or more employees must always keep a RoPA – that’s just under 8,000 businesses in the UK.

If you have fewer than 250 employees, you don’t need a RoPA if all of the following apply:

- Processing does not pose a risk to the rights and freedoms of the data subject

- No special category data is being processed

- Processing is only done occasionally

The debate starts when you consider what constitutes a ‘risk to the rights and freedoms of the data subject’. It’s worth considering the type of data you handle rather than the volumes to help you decide whether to complete a RoPA. As a start-up, you may not need a RoPA as defined in the legislation. However, having a record of what information is processed, for what purpose and under what lawful basis is a good idea, even if completing the ICO’s RoPA form is not.

There are changes afoot with regard to the RoPA under UK data reform plans, but a record of your activities may still be necessary, just not as currently prescribed.
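The RoPA test described in this section can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not legal advice: the function and parameter names are invented for the example, the threshold is taken as 250 or more employees, and the three yes/no inputs are your own judgement on the conditions listed above.

```python
def needs_ropa(
    employees: int,
    poses_risk: bool,        # could processing pose a risk to rights and freedoms?
    special_category: bool,  # is any special category data processed?
    occasional_only: bool,   # is processing only done occasionally?
) -> bool:
    """Return True if a RoPA is needed under the rules summarised above."""
    # Assumption: organisations with 250 or more employees always need a RoPA.
    if employees >= 250:
        return True
    # Smaller organisations are exempt only if all three conditions hold.
    exempt = (not poses_risk) and (not special_category) and occasional_only
    return not exempt


# Example: a 12-person start-up that regularly processes customer data
print(needs_ropa(12, poses_risk=False, special_category=False, occasional_only=False))  # True
```

Note how easily the exemption falls away: most businesses process customer or staff data routinely rather than occasionally, which is one reason keeping some record of processing is a good idea in practice.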

4. Do I need to register with the ICO?

Almost certainly YES. The ICO asks all businesses that process personal data to pay the Data Protection Fee, which is used to fund the ICO and its activities. This isn’t onerous. In fact, most small businesses will only have to pay £40 (or £35 with a direct debit). And that’s before you’ve considered whether you’re exempt; not-for-profit status is one possible ground for exemption.

5. Do I need a privacy notice (policy)?

Yes. A privacy notice is a foundational piece of your data protection work. Any organisation which processes personal data needs to set out what data they are processing and how they are processing it as well as the data subject’s rights. The ICO’s checklist provides very clear guidance for what must be in a notice and what might be in a notice.

6. How about a cookie notice?

Yes again. If you have a website, assume you need a cookie notice. Even if all you’re doing is using cookies to manage the performance of your website, a cookie notice is required. This does not need to cost money. You can get free software from the major privacy software providers, with simple step-by-step set-up guides. There is really no excuse not to have a cookie notice.

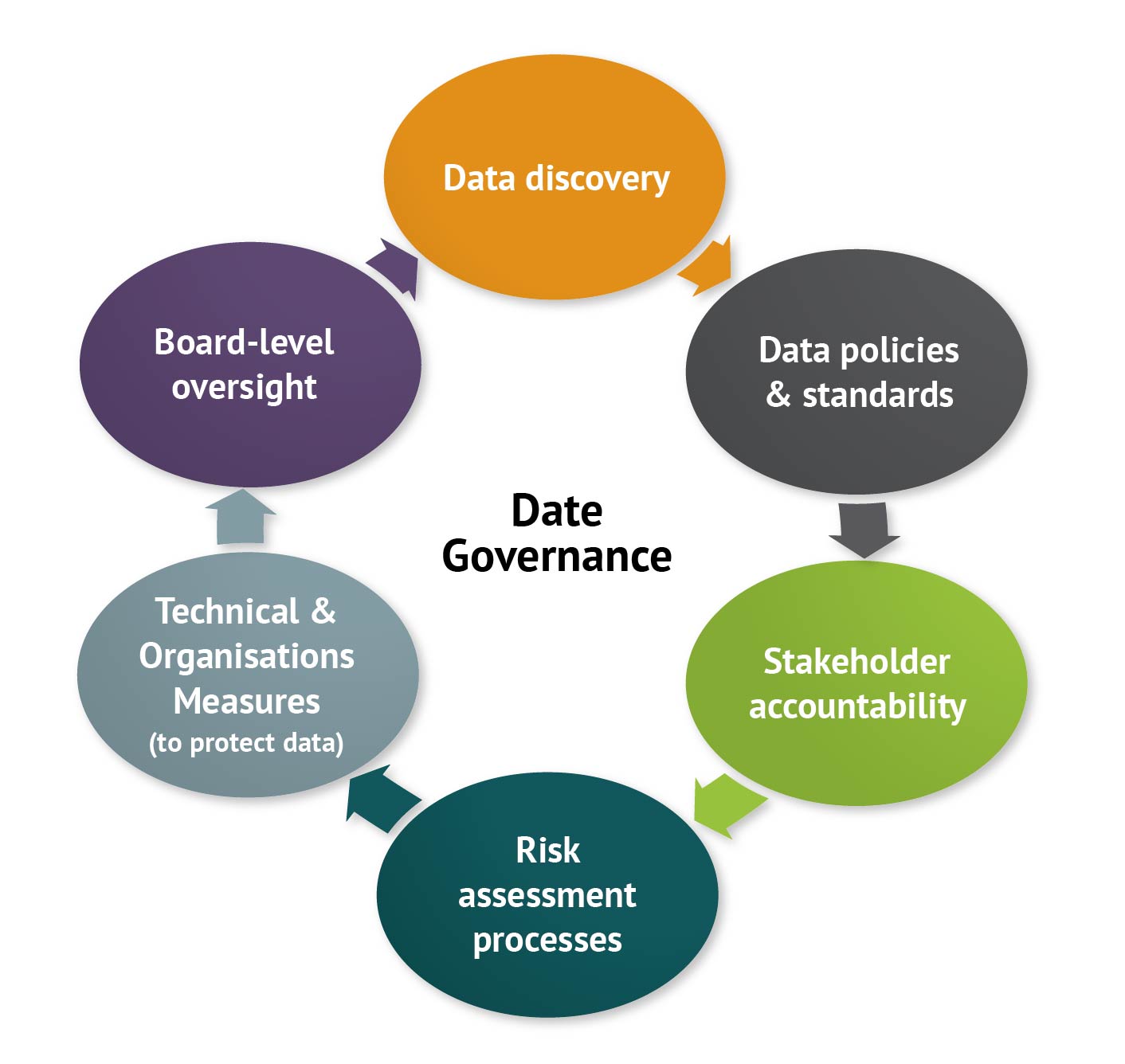

7. What about accountability?

Yes, but make it proportionate. In a nutshell, accountability means ‘evidencing your activities’. Keep a record of what you do, why you’re doing it and your decision-making. It also means making sure you have appropriate technical and organisational measures in place to protect personal data. Have your staff been adequately trained in data protection? Do you have clear guidelines and/or policies to help them?

8. What about Individual Rights?

Yes. Every individual has clear rights and, irrespective of the size of your organisation, you need to fulfil requests to exercise them.

These rights include the right of access, the right to rectification, the right to erasure, the right to restrict processing, the right to data portability, the right to object and the right not to be subject to a decision based solely on automated processing.

Not all of these might apply to a small business but it’s important to decide how to recognise and respond to these requests from individuals.

9. Don’t forget information security

Yes. Cyber Essentials was designed for SMEs. Arguably it’s the absolute minimum for any business. It does cost money but not a lot. Gaining the Cyber Essentials certification (if self-certified) costs £300. The five technical controls are:

- Boundary firewalls and internet gateways

- Secure configuration

- Access control

- Malware protection

- Patch management

10. What about International Data Transfers?

Hopefully no! If you and your suppliers are only operating in the UK and Europe, stop reading now. However, if any data is exported to a third country (such as the USA, South Africa or India), there’s no escaping the fact that international data transfers can be painful to work through.

When the EU-US Privacy Shield was invalidated in 2020, this caused significant problems for data transfers between the US and the EU/UK. At the time, Max Schrems’ advice was to only work with companies based in the UK or Europe that are not exporting data to third countries. However, this isn’t always possible – just consider how many people use Google, Microsoft or Mailchimp.

Many, if not most, businesses will have dealings with these three, and the reality is that you must either accept they’re not going to change anything for you or choose not to use them.

Conclusion

Many small and start-up businesses can get ready relatively quickly. The trick for small business data protection is to review your arrangements on a regular basis and be aware if any more complicated processing emerges. For instance, anything involving automated processing, special category data, AI or children’s data carries significant risk and should be treated with care.

There’s more helpful information available on the ICO’s Small Business Hub.