Guide to identifying and managing data protection risks

Data protection risks come in all shapes, sizes and potential severities. We need to be able to identify the risks associated with our use of personal data, manage them and where necessary put appropriate measures in place to tackle them.

How can we make sure good risk management practices are embedded in our organisation? This short guide covers the key areas to focus on to make sure you’re alert to, and aware of, risks.

1. Assign roles and responsibilities

Organisations can’t begin to identify and tackle data risks without clear roles and responsibilities covering personal data. Our people need to know who is accountable and responsible for the personal data we hold and the processing we carry out.

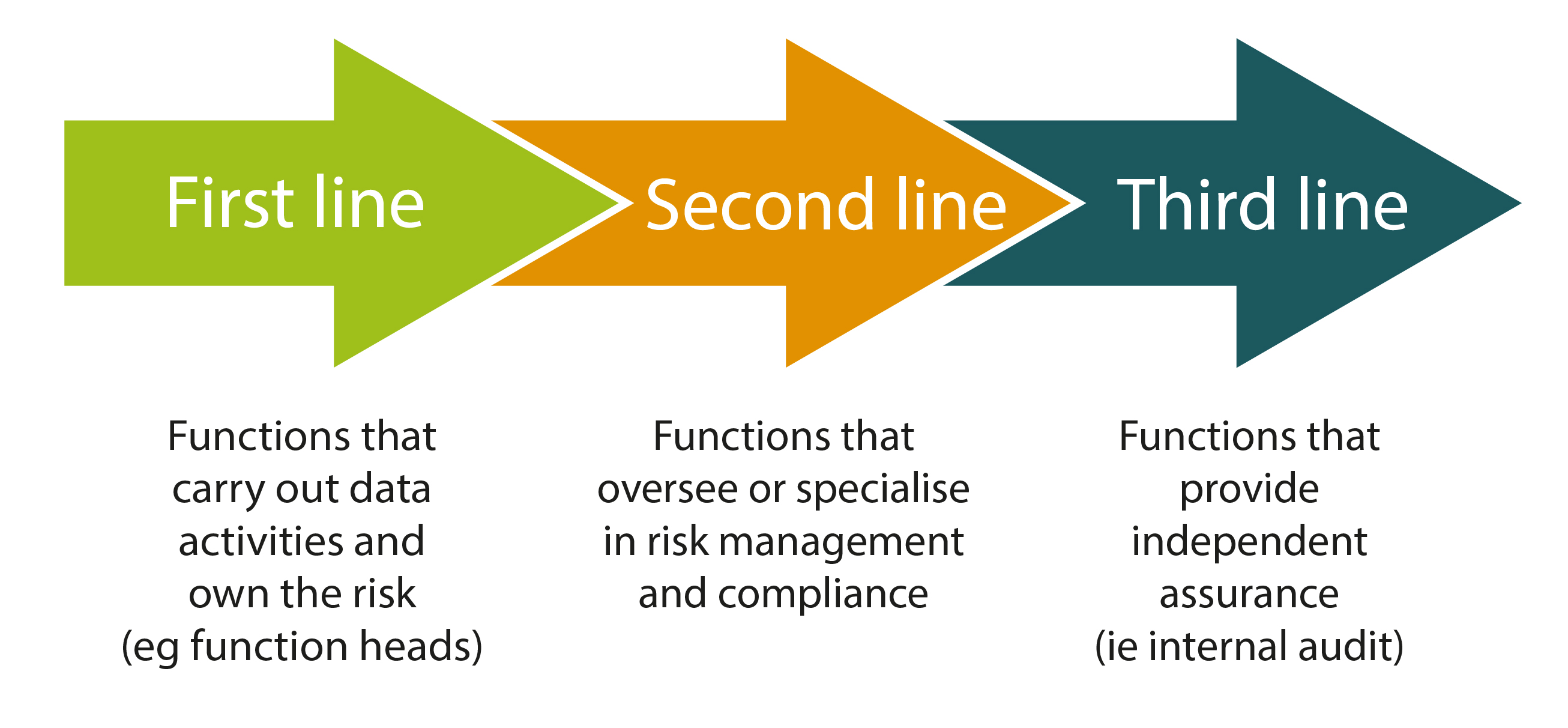

Many organisations apply a ‘three lines of defence’ (3LoD) model for risk management. This model is not only used for data protection, but is also effective for handling many other types of risk a business may face.

- 1st line: The leaders of the business functions that process data are appointed as ‘Information Asset Owners’ and ‘own’ the risks from their function’s data processing activities.

- 2nd line: Specialists like the DPO, CISO & Legal Counsel support and advise the 1st line, helping them understand their obligations under data laws so they can make well-informed decisions about how best to tackle any privacy risks. They provide clear procedures for the 1st line to follow.

- 3rd line: An internal or external audit function provides independent assurance.

For example, risk owners, acting under advice from a Data Protection Officer or Chief Privacy Officer, must make sure appropriate technical and organisational measures are in place to protect the personal data they’re accountable for.

In this model, those in the second line of defence should never become risk owners. Their role is to provide advice and support to the first line risk owners. They should try to remain independent and not make decisions on behalf of their first line colleagues.

2. Decide if you should appoint a DPO

Under the GDPR, a Data Protection Officer’s job is to inform their organisation about its data protection obligations and advise it on risks relating to its processing of personal data.

The law tells us you need to appoint a DPO if your organisation is a Controller or Processor and one or more of the following applies:

- you are a public authority or body (except for courts acting in their judicial capacity); or

- your core activities require large-scale, regular and systematic monitoring of individuals (for example, online behaviour tracking); or

- your core activities consist of large-scale processing of special categories of data or data relating to criminal convictions and offences.
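As a rough illustration, the three conditions above can be written as a simple screening check. This is only a sketch: the class and field names are hypothetical, and the code cannot decide for you whether an activity is 'core' or 'large-scale'. Those judgements still have to be made by people and recorded as the answers fed in here.

```python
from dataclasses import dataclass


@dataclass
class OrganisationProfile:
    """Hypothetical answers to the screening questions listed above."""
    is_controller_or_processor: bool
    # Courts acting in their judicial capacity are excluded from this condition.
    is_public_authority: bool
    core_large_scale_monitoring: bool
    core_large_scale_special_or_criminal_data: bool


def dpo_required(profile: OrganisationProfile) -> bool:
    """Return True if at least one of the three conditions applies."""
    if not profile.is_controller_or_processor:
        return False
    return any([
        profile.is_public_authority,
        profile.core_large_scale_monitoring,
        profile.core_large_scale_special_or_criminal_data,
    ])


# Example: a small retailer with no large-scale monitoring or special category data.
small_retailer = OrganisationProfile(
    is_controller_or_processor=True,
    is_public_authority=False,
    core_large_scale_monitoring=False,
    core_large_scale_special_or_criminal_data=False,
)
print(dpo_required(small_retailer))
```

A `False` result only means a DPO is not mandatory under these three tests. An organisation may still choose to appoint one voluntarily.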

In reality, most small organisations are unlikely to fall under the current UK or EU GDPR requirements to appoint a DPO. In fact, many medium-sized businesses won’t necessarily need a DPO either. Find out more in our DPO myth buster.

3. Conduct data mapping & record keeping

Mapping your data and creating a Record of Processing Activities (RoPA) is widely seen as the best foundation for any successful privacy programme. After all, how can you properly look after people’s data if you don’t have a good handle on what personal data you hold, where it’s located, what purposes it’s used for and how it’s secured?
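To make the four questions above concrete (what data, where, for what purposes, how secured), here is a minimal sketch of how one entry in a data map might be structured. The field names are illustrative assumptions, not a prescribed RoPA template, and a full RoPA would normally capture more than these four points.

```python
from dataclasses import dataclass, field


@dataclass
class ProcessingRecord:
    """One hypothetical entry in a data map, covering the four questions above."""
    activity: str
    personal_data: list[str] = field(default_factory=list)      # what we hold
    locations: list[str] = field(default_factory=list)          # where it is located
    purposes: list[str] = field(default_factory=list)           # what it is used for
    security_measures: list[str] = field(default_factory=list)  # how it is secured


def missing_details(record: ProcessingRecord) -> list[str]:
    """List the questions this record does not yet answer."""
    checks = {
        "personal_data": record.personal_data,
        "locations": record.locations,
        "purposes": record.purposes,
        "security_measures": record.security_measures,
    }
    return [name for name, value in checks.items() if not value]


payroll = ProcessingRecord(
    activity="Payroll",
    personal_data=["name", "bank details"],
    locations=["HR system"],
)
print(missing_details(payroll))
```

Running a check like this across all entries gives a quick view of where the data map still has gaps.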

Even smaller organisations, which may benefit from an exemption from creating a full RoPA, still have basic record keeping responsibilities which should not be overlooked and could still prove very useful. Also see Why is data mapping so crucial?

4. Identify processing risks

Under data protection laws, identifying and mitigating risks to individuals (e.g. employees, customers, patients or clients) is paramount.

Risks could materialise in the event of a data breach, failure to fulfil individual privacy rights (such as a Data Subject Access Request), complaints, regulatory scrutiny, compensation demands or even class actions.

We should recognise our service and technology providers, who may handle personal data on our behalf, could be a risk area. For example, they might suffer a data breach and our data could be affected, or they might not adhere to contractual requirements.

It’s also good to be mindful of commercial and reputational risks, which can arise from an organisation’s use of personal or non-personal data.

International data transfers are another area where due diligence is required to make sure these transfers are lawful. If they are not, recognise that this represents a risk.

Data-driven marketing activities could also be a concern if they are not fully compliant with ePrivacy rules – such as the UK’s Privacy and Electronic Communications Regulations (known as PECR). Even a single complaint to the ICO could result in a business facing a PECR fine and the subsequent reputational damage. Also see: GDPR, marketing & cookies guide

⏩ Data protection practitioners share tips on identifying and assessing risks

5. Risk assessments

In the world of data protection, we have grown used to, or even grown tired of, the requirement to carry out a Data Protection Impact Assessment (DPIA), or a Privacy Impact Assessment (PIA) as it is called in some jurisdictions.

Build in a process of assessing whether projects would benefit from a DPIA, or legally require one. DPIAs are a great way to pinpoint risks and mitigate them early on before they become a bigger problem.

Also see: The value of risk assessments in the world of data protection compliance and Quick Guide to DPIAs

6. Issues arising from poor governance or lack of data ownership

In the real world, the three lines of defence model can come under strain. Sometimes those who should take responsibility as risk owners can have slippery shoulders and refuse to take on the risks.

Some processing doesn’t seem to sit conveniently with any one person or team. Things can fall through the cracks when nobody takes responsibility for making key decisions. On these occasions a DPO might come under pressure to take risk ownership themselves. But should they push back?

Strictly speaking, DPOs shouldn’t ‘own’ data risks; their role is to inform and advise risk owners. GDPR tells us: “data protection officers, whether or not they are an employee of the controller, should be in a position to perform their duties and tasks in an independent manner” (Recital 97).

The ICO, in line with European (EDPB) guidelines, says: “…the DPO cannot hold a position within your organisation that leads him or her to determine the purposes and the means of the processing of personal data. At the same time, the DPO shouldn’t be expected to manage competing objectives that could result in data protection taking a secondary role to business interests.”

So, if the DPO takes ownership of an area of risk, and plays a part in deciding what measures and controls should be put in place, could they be considered to be ‘determining the means of the processing’? This could lead to a conflict of interest when their role requires them to act independently.

Ultimately, accountability rests with the organisation. It’s the organisation which uses the data, collects the data and runs with it. Not the DPO.

7. Maintain an up-to-date risk register

When you identify a new risk it should be logged and tracked on your Data Risk Register. The ICO expects organisations to: identify and manage information risks in an appropriate risk register, which includes clear links between corporate and departmental risk registers and the risk assessment of information assets.

To do this you’ll need to integrate any outcomes from risk assessments (such as DPIAs) into your project plans, update your risk register(s) and keep these registers under continual review by the DPO or responsible individuals.
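The sketch below shows one way a simple risk register could be represented so that new risks are logged with an owner and a source, and stale entries are flagged for review. Everything here is an assumption for illustration: the field names, the status values and the 90-day review interval are hypothetical and should be replaced with whatever your own governance process specifies.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class RiskEntry:
    """One hypothetical line in a data risk register."""
    risk_id: str
    description: str
    owner: str          # the first line risk owner, not the DPO
    source: str         # e.g. the DPIA or audit that identified the risk
    last_reviewed: date
    status: str = "open"


def overdue_for_review(register: list[RiskEntry], today: date,
                       review_interval_days: int = 90) -> list[RiskEntry]:
    """Return open risks not reviewed within the interval (90 days is an assumed default)."""
    cutoff = today - timedelta(days=review_interval_days)
    return [r for r in register if r.status == "open" and r.last_reviewed < cutoff]


register = [
    RiskEntry("R-001", "Supplier breach could expose customer data",
              owner="Head of Procurement", source="Supplier DPIA",
              last_reviewed=date(2024, 1, 10)),
    RiskEntry("R-002", "Marketing consent records incomplete",
              owner="Head of Marketing", source="Internal audit",
              last_reviewed=date(2024, 5, 20)),
]

for risk in overdue_for_review(register, today=date(2024, 6, 1)):
    print(risk.risk_id, risk.owner)
```

Recording the owner against each entry keeps accountability with the first line, in keeping with the three lines of defence model described earlier.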